It's going to be fun, trust me 😂

Why? 🤷‍♂️

You see, I wanted to do a project with Transfer Learning

But it also needed to be interesting for the humans who are going to read this

Then I suddenly got an idea

You see, David Wisley (the GOAT) once said

"Humans are more likely to be drawn to famous people"

So I can take that... and apply my very, very small knowledge of machine learning

And ta-da!

An idea that no one had ever thought of before arose from the ground

What is Transfer Learning, anyway? 🤔

I covered this topic briefly in my last blog post, on satellite classification

But let's get our hands a little dirty this time

And if you are doing this, right now...

In the end, if you stick to it you will do this

so let's get started, shall we?

**Read the rest of the blog** (you are not going to regret it)

Transfer Learning overview

You see, the name describes itself: we are just going to transfer knowledge from one object to another

Here the object represents our AI model

OK, is this what we are going to do to our AI model?

No, not like this GIF, but similar to the idea it represents

Then why did I use this GIF? ... It's not my idea

It's Stephan's idea

Ohh oh... I think we made Stephan angry.. let's not talk about this again

Let's train Classy, our AI model

First, we need data

I got it from Google: I just searched for Elon Musk photos and Tony Stark photos

And got these 10 images for each class (person)
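Before training, it can help to double-check that the folder really holds what we think it does. Here is a minimal sketch, assuming the usual layout of one subfolder per class (for example `elon/` and `stark/`). The helper name `count_images_per_class` and the list of file suffixes are my own assumptions, not part of any library.

```python
from pathlib import Path

# Hypothetical set of file extensions we treat as images.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}

def count_images_per_class(root):
    """Return {class_folder_name: number_of_image_files} for each subfolder of root."""
    counts = {}
    for class_dir in sorted(Path(root).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1 for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts
```

Calling `count_images_per_class(train_dir)` on a folder like ours should give 10 for each of the two classes; if one class has far fewer images, that is worth fixing before training.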

Problem with the normal approach

You see, most of the time we build our model from the ground up to recognize the patterns in the given data

But a plain stack of Dense layers like this one needs many more images before it can recognize the patterns

So now we have this problem (or rather, I intentionally created it): there isn't enough data for our model to learn the patterns that separate the two faces (Elon vs Tony)
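To get a feel for why a from-scratch dense network struggles here, we can count how many numbers it would have to learn. This is only a back-of-the-envelope sketch: the hidden layer width of 128 is a made-up value for illustration, and the 224x224 RGB input size is borrowed from the image size used later in this post.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of one fully connected (Dense) layer."""
    return n_inputs * n_units + n_units

input_size = 224 * 224 * 3   # one flattened RGB image
hidden_units = 128           # hypothetical hidden layer width
n_classes = 2                # elon vs stark
n_images = 10 * n_classes    # 10 images per class

total_params = (
    dense_params(input_size, hidden_units)
    + dense_params(hidden_units, n_classes)
)
print(f"{total_params:,} parameters to learn from {n_images} images")
```

That comes to roughly 19.3 million parameters for only 20 images, which is a hint that the model would memorize the pictures instead of learning faces.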

This is where we get into the Transfer Learning part of this blog

Transfer Learning:

Let me explain it in the context of our (Elon vs Tony) problem:

We take the MobileNet model from TensorFlow Hub, one of the most popular places to get models that have already been trained on large datasets like ImageNet (which has more than a million images and a thousand classes to tell apart)

Then we train that model on our 10 images per class, so it can quickly pick up our problem by looking at just a very small amount of data

In short, we only need a few images to train our AI because it has already been trained on so many images (covering problems like ours and more) that it knows how to pick out the patterns in a given image quickly and efficiently from just a few examples
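The core trick is that the big pretrained part stays frozen and only a tiny new "head" on top gets trained. Here is a toy sketch of that idea in plain Python. It is not MobileNet: `frozen_features` is a hand-written stand-in for a pretrained base, and the four data points are invented for illustration.

```python
import math

def frozen_features(x):
    """Stand-in for a pretrained base: a fixed mapping that is never updated."""
    return [x[0] + x[1], x[0] - x[1]]

def predict(w, b, feats):
    """Sigmoid output of the small trainable head."""
    z = sum(wi * fi for wi, fi in zip(w, feats)) + b
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, lr=0.5, epochs=200):
    """Train only the head (w, b) with plain SGD on the log loss."""
    w, b = [0.0, 0.0], 0.0
    feats = [frozen_features(x) for x in samples]  # base runs once, unchanged
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            err = predict(w, b, f) - y             # gradient of log loss w.r.t. z
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

# Four made-up samples, two per class.
samples = [(1.0, 0.2), (0.9, 0.1), (0.1, 0.9), (0.2, 1.0)]
labels = [0, 0, 1, 1]

w, b = train_head(samples, labels)
preds = [round(predict(w, b, frozen_features(x))) for x in samples]
print(preds)
```

Because the frozen features already separate the two groups, the head needs only a handful of examples and three numbers (two weights and a bias) to get them right. Our real model works the same way, just with a far richer frozen base.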

How do humans identify a person's face?

You see, on average we have about 86 billion neurons in our brains that work together to form a pattern of a person's face so we can remember them

If I wanted to build 86 billion neurons, or even something close to a billion, it would take a while... I mean quite literally

So here we can use transfer learning to train our model on 10 images for each class and predict them with an accuracy of 95%

Code:

MobileNet_Url = "https://tfhub.dev/google/imagenet/mobilenet_v3_large_100_224/classification/5"

train_dir = "/content/drive/MyDrive/Datasets/elonvsstark/"

class_names = ["elon","stark"]

import tensorflow as tf

from tensorflow.keras.preprocessing.image import ImageDataGenerator

im = ImageDataGenerator(rescale=1/255)

train_data = im.flow_from_directory(train_dir, batch_size=32, target_size=(224, 224), class_mode="categorical")

import tensorflow_hub as hub

mobilenet_layer = hub.KerasLayer(MobileNet_Url,trainable=False)

model = tf.keras.Sequential([mobilenet_layer, tf.keras.layers.Dense(2, activation="softmax")])

model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])

model_history= model.fit(train_data,epochs=5,steps_per_epoch=len(train_data))

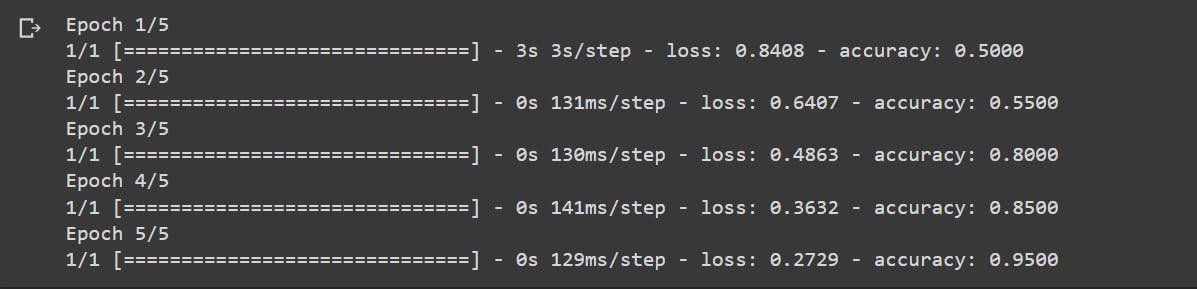

Output:

As we can see, Classy, our AI model, reached 95% training accuracy by just looking at our 10 images per class 🔥

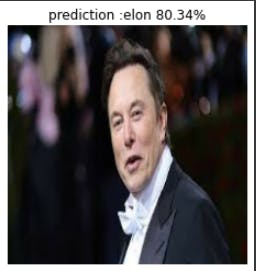

Let's see it in action 😎

Prediction:

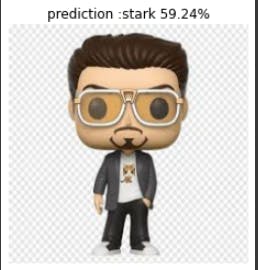

Let's intentionally confuse our model, because it's doing very well so far 🔥🎲

Well, I'm going to leave it to you to judge our model on this one. What do you think of this prediction value (where both classes are present in the same picture)?
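One way to read a prediction like this is to turn the two softmax values into a label, and to say "unsure" when neither class clearly wins. The sketch below is my own illustration: the helper `decode_prediction`, the 0.75 cut-off, and the example probabilities are all made up and are not the values from the screenshots. It assumes index 0 is `elon` and index 1 is `stark`, which matches how Keras orders class folders alphanumerically (`train_data.class_indices` shows the actual mapping).

```python
class_names = ["elon", "stark"]

def decode_prediction(probs, class_names, min_confidence=0.75):
    """Map softmax probabilities to (label, confidence).

    Returns "unsure" as the label when the top probability is below
    min_confidence (a hypothetical threshold, tune it for your data).
    """
    best = max(range(len(probs)), key=lambda i: probs[i])
    label = class_names[best] if probs[best] >= min_confidence else "unsure"
    return label, probs[best]

# Made-up probabilities for illustration only.
print(decode_prediction([0.93, 0.07], class_names))  # a clear single-face photo
print(decode_prediction([0.55, 0.45], class_names))  # both faces in one picture
```

With a photo containing both people, a near 50/50 split is arguably the most honest answer a two-class model can give, since it was never taught an "both" option.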

Conclusion

In deep learning, we don't always have to build our own model from scratch; we just have to know when and where to reuse an existing one

Here is my code: @sriramgithub

My LinkedIn: don't click here

If you think you are stuck or I didn't explain this clearly enough

Just let me know

until then

Bye from Me and Dany

Well, I think he is going to be busy...for another two days

OK, so it's just me then

Bye, ( ̄︶ ̄)↗