How to make an AI model that can be used in space satellites

An overview of building a convolutional model to classify satellite images

What is Satellite Imaging?

Isn't "imaging" a self-explanatory word?

the process of making a visual representation of something by scanning it with a detector or electromagnetic beam

NO! (at least not for me 😅)

Because as I'm writing this, I just figured out that imaging means that definition and not just

Taking pictures with a camera

Hey! If you didn't know that definition either, well, congrats, now you do 🥳

OK, now you may ask what it actually means

Let's take the same satellite example: 🧪

You see "Satellite imaging or remote sensing is the scanning of the earth by satellite or high-flying aircraft in order to obtain information about it"

But the catch is that there are many different satellites scanning the Earth, each with its own unique purpose

In general, satellites use different kinds of sensors that can detect electromagnetic radiation reflected from the Earth

They actually use two kinds of sensors to detect the radiation

- Passive

- Active

In short

A passive sensor collects radiation that the sun emits and the Earth reflects

An active sensor emits its own radiation from the satellite and analyzes it after it is reflected back from the Earth

An object floating in space does this kind of stuff (just thinking about it makes me feel like a genius)

🐒➡👨💻

Well anyways... let's get to the title of the blog

As the title says, we need to create an AI model that can categorize the images taken by a satellite

Why do we need to do it?

Why not?

You see, it's way easier to let an AI 🤖 categorize the images, so we can create other cool stuff in the meantime (like writing a blog about it 😁)

And it's my fun project about convolutional neural networks

What is a convolutional neural network anyway?

A convolutional neural network (CNN) is a type of artificial neural network used in image recognition and processing that is specifically designed to process pixel data

It's going to be the brain of our AI model
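To make "designed to process pixel data" a bit more concrete, here is a tiny NumPy sketch of the operation a convolutional layer repeats many times: sliding a small kernel over an image and summing the element-wise products. The image, the kernel and the `conv2d_valid` helper are all made up for this example. A real layer learns its kernel values during training instead of using hand-picked ones.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with no padding and a stride of 1."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the patch under the kernel element-wise and sum it up.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 5x5 toy "image": dark on the left, bright on the right.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# A hand-picked 3x3 kernel that responds to left-to-right brightness changes.
vertical_edge = np.array([[-1, 0, 1],
                          [-1, 0, 1],
                          [-1, 0, 1]], dtype=float)

feature_map = conv2d_valid(image, vertical_edge)
print(feature_map.shape)
print(feature_map)
```

The feature map is large where the kernel sits on the dark-to-bright edge and zero where the patch is uniformly bright, which is the kind of signal later layers build on.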

Let's get into the problem

First, we need data to work on

And here is where our friendly neighborhood Kaggle website comes in

What is Kaggle?

Kaggle is a website where you can find competitions to solve data science problems. It's free to join and it gives you the opportunity to practice your skills on real-world datasets in various industries.

The main thing is that it also gives you data for free 😎

Our dataset is: RS (remote-sensing) satellite images

Let's visualize our dataset 👀

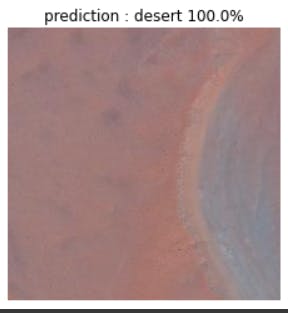

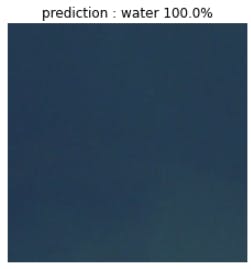

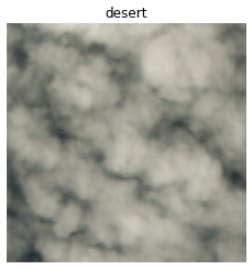

We are going to predict four categories (classes): ['cloudy', 'desert', 'green_area', 'water']

Code :

import os
import random

import numpy as np
import matplotlib.pyplot as plt
import matplotlib.image as mpim

# The four class folders inside the dataset directory
class_names = ['cloudy', 'desert', 'green_area', 'water']

def visualize_image(data_dir, class_name):
    """Show one randomly chosen image from the given class folder."""
    chosen_dir = os.path.join(data_dir, class_name)
    random_image = random.choice(os.listdir(chosen_dir))
    img_data = mpim.imread(os.path.join(chosen_dir, random_image))
    plt.figure(figsize=(10, 7))
    plt.imshow(img_data)
    plt.title(class_name)
    plt.axis(False)

data_dir = "/content/drive/MyDrive/Satellite Image/data"
visualize_image(data_dir, class_names[0])

Output :

There are more than 1000 images like this in the four classes above to train our AI model

Now let's split our dataset into train and test sets

Get it? He is doing the splits and we are splitting our dataset

Well anyways...

Code :

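import tensorflow as tf

# x_train/x_test, y_train/y_test and image_label are defined elsewhere in the
# project code, not in this post.
train_data = tf.data.Dataset.from_tensor_slices((x_train, y_train))
train_data = train_data.map(image_label).batch(32)
train_data

valid_data = tf.data.Dataset.from_tensor_slices((tf.constant(x_test), tf.constant(y_test)))
valid_data = valid_data.map(image_label).batch(32)
valid_data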

So now we have our data in two sets: training and validation

But if you are already familiar with my blogs, then you may ask: what about the test set? 🤔

If you don't know what I'm talking about when I mention my blog, just go here: @justdoitanything, a VERY talented person who records his AI projects as blog posts 😚

Well anyways...

If you do know what I'm talking about, then yeah... this project contains a test set, but I will only use it for predictions once everything is on its final run
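The splitting step itself isn't shown in this post, so here is a rough sketch of how a train/validation/test split with one-hot labels could look. It is not the project's actual code: the `split_paths` and `one_hot` helpers, the 20% and 10% fractions, and the file names are all assumptions made up for illustration. The one-hot labels are there because the `categorical_crossentropy` loss used later expects them.

```python
import random

class_names = ["cloudy", "desert", "green_area", "water"]

def one_hot(index, num_classes):
    """Return a list of zeros with a single 1.0 at position `index`."""
    vector = [0.0] * num_classes
    vector[index] = 1.0
    return vector

def split_paths(paths_by_class, valid_fraction=0.2, test_fraction=0.1, seed=42):
    """Shuffle each class separately so every split keeps all four classes."""
    rng = random.Random(seed)
    splits = {"train": [], "valid": [], "test": []}
    for index, name in enumerate(class_names):
        paths = list(paths_by_class[name])
        rng.shuffle(paths)
        n_test = int(len(paths) * test_fraction)
        n_valid = int(len(paths) * valid_fraction)
        label = one_hot(index, len(class_names))
        for position, path in enumerate(paths):
            if position < n_test:
                key = "test"
            elif position < n_test + n_valid:
                key = "valid"
            else:
                key = "train"
            splits[key].append((path, label))
    return splits

# Made-up file names standing in for the real dataset folders.
fake_paths = {name: [f"{name}/img_{i}.jpg" for i in range(10)] for name in class_names}
splits = split_paths(fake_paths)
print({key: len(items) for key, items in splits.items()})
```

The test split is set aside first and never touched during training, which matches the idea of keeping it for the final run only.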

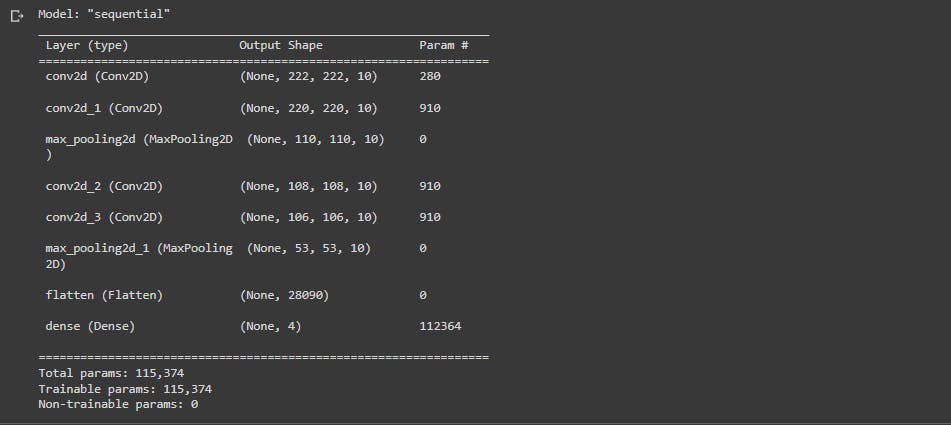

Creating a Pixie AI Model 🤖

Code :

# A small CNN: two blocks of (Conv2D, Conv2D, MaxPool2D), then a softmax classifier
Pixie = tf.keras.Sequential([
    tf.keras.layers.Conv2D(10, 3, activation="relu", input_shape=(224, 224, 3)),
    tf.keras.layers.Conv2D(10, 3, activation="relu"),
    tf.keras.layers.MaxPool2D(),
    tf.keras.layers.Conv2D(10, 3, activation="relu"),
    tf.keras.layers.Conv2D(10, 3, activation="relu"),
    tf.keras.layers.MaxPool2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(len(class_names), activation="softmax"),  # one output per class
])

Pixie.summary()

Output :
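If you want to double-check what `summary()` reports, the layer shapes and parameter counts can be worked out by hand. This is a small sketch that assumes the Keras defaults (Conv2D with no padding and a stride of 1, MaxPool2D with a 2x2 window). The helper names are made up for this example.

```python
def conv_output(size, kernel=3):
    """Spatial size after a convolution with no padding and stride 1."""
    return size - kernel + 1

def conv_params(in_channels, filters, kernel=3):
    """Weights of all kernels plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters

size, channels = 224, 3  # input images are 224x224 RGB
total_params = 0

for layer in ["conv", "conv", "pool", "conv", "conv", "pool"]:
    if layer == "conv":
        total_params += conv_params(channels, filters=10)
        size, channels = conv_output(size), 10
    else:
        size //= 2  # 2x2 max pooling halves height and width
    print(layer, (size, size, channels))

flat_size = size * size * channels
dense_params = flat_size * 4 + 4  # four classes, one bias each
total_params += dense_params

print("flatten", flat_size)
print("total parameters", total_params)
```

Almost all of the parameters sit in the final Dense layer, because the flattened feature map is still quite large.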

Let's fit this model to the dataset we created

Code :

Pixie.compile(loss="categorical_crossentropy",
              optimizer="adam",
              metrics=["accuracy"])  # metrics is passed as a list

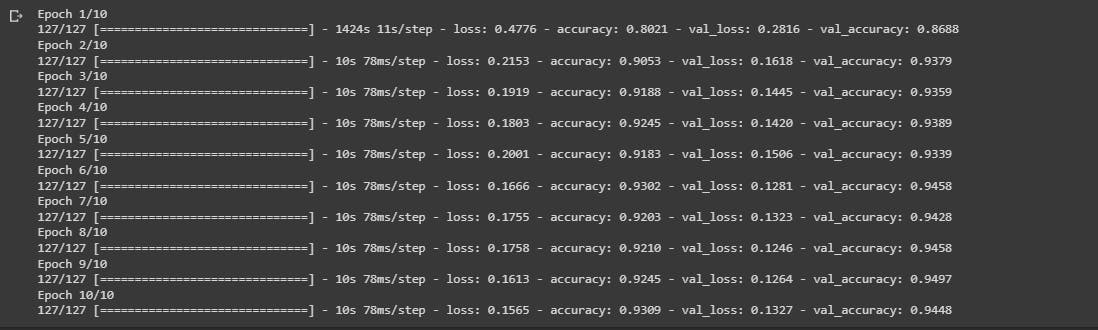

history_1 = Pixie.fit(train_data,
                      epochs=10,
                      steps_per_epoch=len(train_data),
                      validation_data=valid_data,
                      validation_steps=len(valid_data))

Output :

And wow, would you look at that 🥳 our Pixie AI got 94% accuracy

In human terms, if you showed our Pixie 10 images, it would get about 9 of them right most of the time

Which is of course a really great thing 🔥
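For the curious, here is roughly what the loss and accuracy numbers mean, written out in NumPy. It is only a sketch: the scores below are invented for four imaginary images (one per class), and the function names are mine, not Keras internals.

```python
import numpy as np

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 per image."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Average negative log-probability assigned to the true class."""
    y_prob = np.clip(y_prob, eps, 1.0)
    return float(np.mean(-np.sum(y_true * np.log(y_prob), axis=1)))

def accuracy(y_true, y_prob):
    """Fraction of images whose most likely class is the true class."""
    return float(np.mean(y_true.argmax(axis=1) == y_prob.argmax(axis=1)))

# Invented scores for 4 images; columns are cloudy, desert, green_area, water.
logits = np.array([[4.0, 0.5, 0.1, 0.2],
                   [0.3, 3.0, 0.2, 0.1],
                   [0.1, 0.2, 1.0, 1.5],   # a green_area image scored as water
                   [0.2, 0.1, 0.4, 2.5]])
y_true = np.eye(4)  # one-hot labels: image i belongs to class i

probs = softmax(logits)
loss = categorical_crossentropy(y_true, probs)
acc = accuracy(y_true, probs)
print("loss:", loss)
print("accuracy:", acc)
```

Accuracy only counts right and wrong answers, while the loss also punishes the model for being unsure about the right ones.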

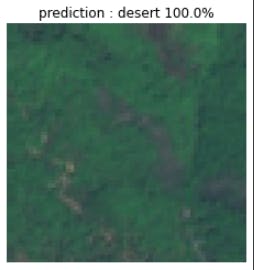

Let's see some examples of our Pixie model predicting the validation images

You see, it's predicting these two classes well, but when it comes to the other two classes

As you can see, it's not that good on either of the images

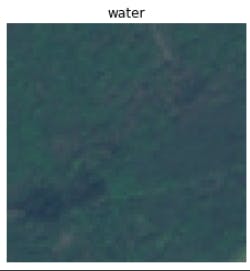

But from our model's point of view, most of the images look like this

That kind of looks like a desert to me if you take the colors out of it 😐

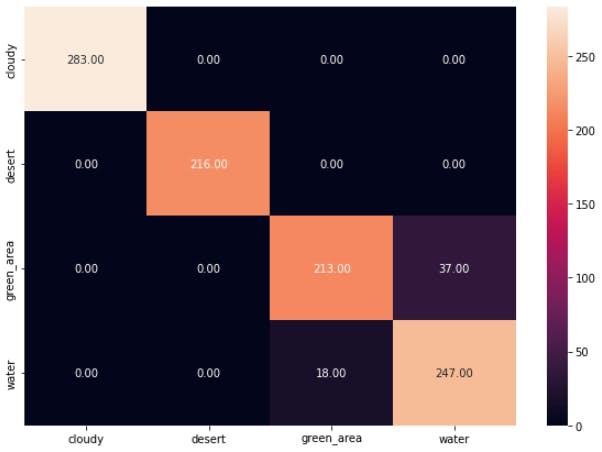

Let's look at the confusion matrix

As we can see again, it is getting confused between green_area (forest) and water (ocean)

But let's try a transfer learning model on these images
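If you haven't built a confusion matrix before, here is a minimal NumPy sketch. The true and predicted class ids are invented to mimic the kind of mix-up described above. They are not the real results of the Pixie model.

```python
import numpy as np

class_names = ["cloudy", "desert", "green_area", "water"]

def confusion_matrix(true_ids, pred_ids, num_classes):
    """Rows are the true class, columns are the predicted class."""
    matrix = np.zeros((num_classes, num_classes), dtype=int)
    for true_id, pred_id in zip(true_ids, pred_ids):
        matrix[true_id, pred_id] += 1
    return matrix

# Invented labels: cloudy and desert are easy, green_area and water get mixed up.
true_ids = [0, 0, 1, 1, 2, 2, 2, 3, 3, 3]
pred_ids = [0, 0, 1, 1, 2, 3, 3, 3, 3, 2]

matrix = confusion_matrix(true_ids, pred_ids, len(class_names))
print(matrix)

# Per-class recall: how many images of each true class were predicted correctly.
recall = matrix.diagonal() / matrix.sum(axis=1)
for name, value in zip(class_names, recall):
    print(f"{name}: {value:.2f}")
```

Everything off the diagonal is a mistake, so a confused pair of classes shows up as two off-diagonal cells that mirror each other.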

Transfer Learning

Just like the name says

Transfer learning is a research problem in machine learning that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem

For example:

Knowledge gained while learning to recognize cars (project x) can be reused when trying to recognize trucks (project y). The model can then distinguish between trucks and cars without being given a large number of car images again

Because it already learned from a lot of car images in project x.
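Here is the idea in miniature, as a toy NumPy sketch rather than the MobileNetV2 code used in this post. A fixed "base" turns inputs into features and only a small new "head" is trained on the new task. Everything here is made up for illustration: the random base weights stand in for knowledge learned on an earlier project, and the data is synthetic. Keeping the base completely frozen is one common way to do transfer learning. It is an assumption of this sketch, not something this post's model does.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a pretrained base model: these weights are never updated.
base_weights = rng.normal(size=(8, 16))
base_weights_before = base_weights.copy()

def base_features(x):
    """Frozen feature extractor: a linear projection followed by ReLU."""
    return np.maximum(x @ base_weights, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def head_loss(features, labels, head):
    """Binary cross-entropy of a logistic-regression head."""
    probs = np.clip(sigmoid(features @ head), 1e-7, 1 - 1e-7)
    return float(np.mean(-(labels * np.log(probs) + (1 - labels) * np.log(1 - probs))))

# A small synthetic dataset for the "new" task.
x = rng.normal(size=(200, 8))
labels = (x[:, 0] > 0).astype(float)
features = base_features(x)  # computed once, because the base never changes

head = np.zeros(16)  # the only weights that get trained
initial_loss = head_loss(features, labels, head)

for _ in range(300):
    probs = sigmoid(features @ head)
    gradient = features.T @ (probs - labels) / len(labels)
    head -= 0.01 * gradient  # plain gradient descent on the head only

final_loss = head_loss(features, labels, head)
print("loss before:", initial_loss)
print("loss after:", final_loss)
```

The new task only has to fit 16 head weights, which is why transfer learning can get by with far fewer images than training everything from scratch.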

Code :

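from tensorflow.keras.applications.mobilenet_v2 import MobileNetV2

# MobileNetV2 pretrained on ImageNet, without its original classification head
base_Pixie = MobileNetV2(input_shape=(224, 224, 3), weights='imagenet', include_top=False)

pool = tf.keras.layers.GlobalAveragePooling2D()
mid_layer = tf.keras.layers.Dense(100, activation='relu')
final_1 = tf.keras.layers.Dense(4, activation='softmax')  # one output per class

# Note: the Dense layer runs before pooling here; pooling first
# (base_Pixie, pool, mid_layer, final_1) is the more common order.
Pixie_1 = tf.keras.Sequential([base_Pixie, mid_layer, pool, final_1])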

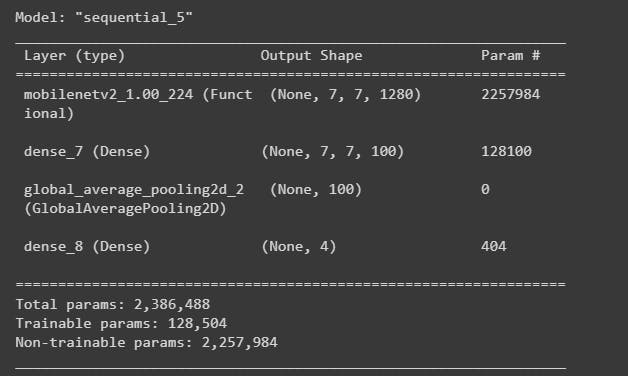

Pixie_1.summary()

Output :

Let's fit our model

Code :

Pixie_1.compile(loss='categorical_crossentropy',
                optimizer='adam',
                metrics=['accuracy'])  # metrics is passed as a list

History = Pixie_1.fit(train_data,
                      epochs=15,
                      steps_per_epoch=len(train_data),
                      validation_data=valid_data,
                      validation_steps=len(valid_data))

Output :

Let's run our Pixie AI model on the test data

Test data that our model has never seen before

As we can see, it's still getting confused by the image

And I think it's basically because the images of trees and water are not clear enough for our model

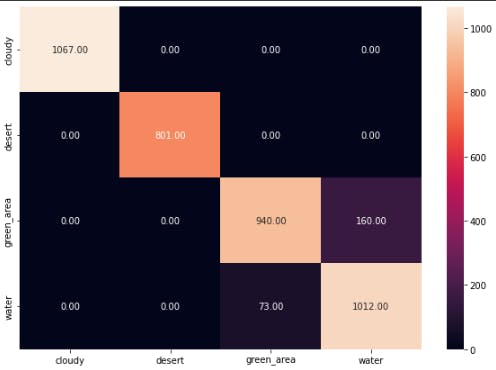

Let's look at the confusion matrix

As we can see, it's still not getting them right, even with the help of a powerful technique like transfer learning

Conclusion

Let's leave our model here for now

Maybe in the future, if we get a large amount of data on trees and water, we may predict every class right

That said ψ(`∇´)ψ

Just look at that

Our model is doing great on the other two classes of our data 🔥💥

This is a really great performing model in that sense 👑

NOTE:🎯

This blog is just an overview of my process for making an AI predict the images

If you want to go in depth into my code, just go here: @github

I will also write a convolutional model blog in the future, where I can go in depth about the learning process that happens behind the scenes

Well, thank you very much for your time ╰(°▽°)╯

Bye ~(=^‥^)ノ

- My LinkedIn profile @sriram